EveryPoint – reality capture with the iPhone Pro

It’s been a long time coming, but we are about to enter an era where we will all have the ability to quickly and easily make 3D reality captures of environments or objects using the phone in our pockets. Martyn Day explores EveryPoint.

The LiDAR (Light Detection and Ranging) laser scanning market moves at a glacial pace. For years, it was hoped that the price of laser scanners would drop below $12,000 and so democratise reality capture. Typically, laser scanners have cost $40,000 and have been large, heavy devices, and for long-range scanning this is still the reality. Where range is less of an issue, there has been some movement.

In 2010 Faro made a significant release with the Focus3D: at $20,000, it was small enough to qualify as hand luggage on a plane. Then in 2016 Leica launched the BLK360, which could fit in a handbag. This brought the entry price down to $15,000, but that is still pretty much the lowest entry point for professional laser scanners.

While we have been waiting for vendors to move down from the top to the entry level, there have also been multiple attempts to enter at the low end and move up.

Microsoft’s Kinect for the Xbox was a low-cost, low-resolution structured light scanner, which served as the basis for a lot of research into portable, lightweight scanning solutions built on the exploding tablet market.

This small-format, low-power technology developed rather slowly and had trouble gaining traction when brought to market. The solutions we saw at AEC Magazine were all best suited to scanning individual objects or small areas, as opposed to buildings or whole interiors.

Different bits of mobile hardware came and went, none really leaving any impression, even when firms the size of Intel got involved. Then Apple decided to include small depth sensors in its high-end iPhone and iPad products.

One of these, a front-facing structured light sensor, was for face recognition and security, while the rear-facing LiDAR scanner was there mainly for developers to experiment with Augmented Reality (AR) apps and, at some point in the future, to support Apple’s much-vaunted AR glasses.

Apple’s sensor is not high-fidelity, and the depth data just doesn’t offer the resolution needed for detailed 3D scanning. On the face of things this didn’t appear to be a platform for professional AEC solutions.

While the hardware side of reality capture was developing slowly, software for image-based photogrammetry was being rapidly refined. Here, multiple photos or video frames of an object or scene are processed to work out where the camera was, relative to the subject, for each image; 3D models can then be computed from the many different viewpoints.
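To make the idea concrete, here is a minimal Python sketch of the geometric core of photogrammetry: once the camera positions are known, the same feature seen in two photos defines two rays, and the 3D point lies where they (nearly) meet. It assumes an ideal pinhole camera with known focal length, principal point and orientation, and uses the simple midpoint method; `pixel_to_ray` and `triangulate_midpoint` are hypothetical helpers for illustration, not part of any EveryPoint or Apple API. Real pipelines also have to estimate the camera poses themselves (structure from motion) and refine thousands of points at once with bundle adjustment.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """3D dot product."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pixel_to_ray(u: float, v: float, f: float, cx: float, cy: float,
                 R: List[List[float]]) -> Vec3:
    """Hypothetical helper: back-project pixel (u, v) through an ideal pinhole camera.

    f, cx, cy are the focal length and principal point in pixels; R is the
    camera-to-world rotation (row-major 3x3). Returns a world-space ray direction.
    """
    d_cam = ((u - cx) / f, (v - cy) / f, 1.0)
    x, y, z = (dot(row, d_cam) for row in R)
    return (x, y, z)


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Hypothetical helper: midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = (c1[0] - c2[0], c1[1] - c2[1], c1[2] - c2[2])
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b  # zero when the rays are parallel (no baseline)
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = [c1[i] + s * d1[i] for i in range(3)]
    p2 = [c2[i] + t * d2[i] for i in range(3)]
    return ((p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2, (p1[2] + p2[2]) / 2)


# Two cameras 2 m apart, both facing +z, see the same feature in their photos.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
ray_a = pixel_to_ray(20.0, 20.0, 100.0, 0.0, 0.0, IDENTITY)   # camera at the origin
ray_b = pixel_to_ray(-20.0, 20.0, 100.0, 0.0, 0.0, IDENTITY)  # camera at (2, 0, 0)
print(triangulate_midpoint((0.0, 0.0, 0.0), ray_a, (2.0, 0.0, 0.0), ray_b))
# approximately (1.0, 1.0, 5.0)
```

The wider the baseline between the two cameras, the better conditioned the intersection, which is one reason photogrammetry benefits from many contrasting views.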

By 2017, photogrammetry was easily rivalling laser scans for accuracy. This not only challenged the established market for laser capture hardware, but also turned anything able to take photographs into a reality capture ‘scanner’.

Now if only we all had a device which could capture video and / or photographs, and perhaps had some basic scanning capability, I wonder what would be possible?

Photogrammetry meets LiDAR

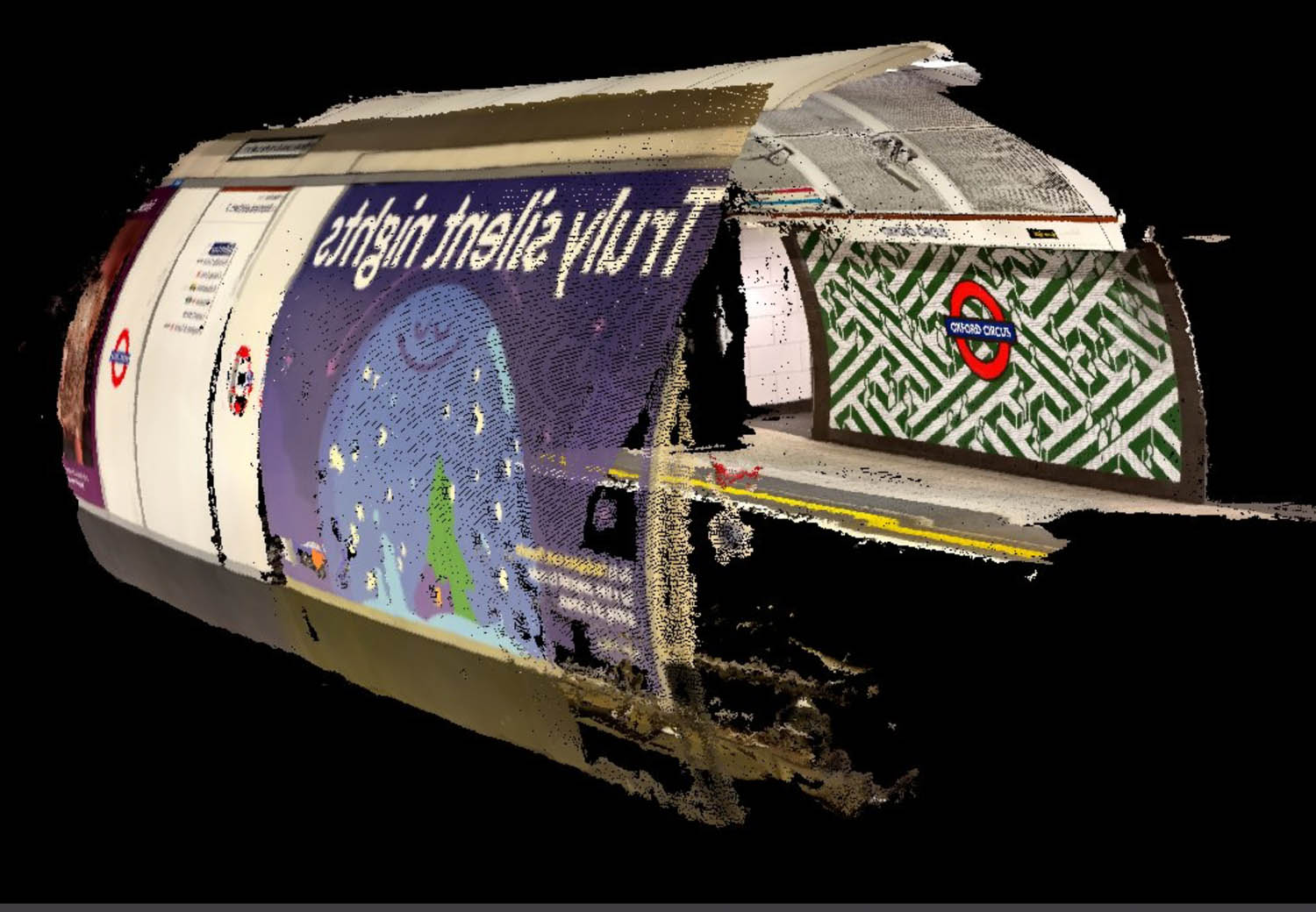

EveryPoint combines photogrammetry techniques with the iPhone Pro’s LiDAR scanner to fill in the gaps, and also makes use of the positional and accelerometer data from Apple’s ARKit framework. Combined, these deliver colour 3D models that are said to be more accurate than those from the iPhone’s LiDAR scanner alone.

EveryPoint is not an end-user product in itself, but a technology layer on which developers can build their own solutions for construction and other industries.

The origins of EveryPoint go back to Stockpile Reports, a US firm founded in 2011. The company built its business on helping firms locate and estimate inventory (stockpiles) to support operations and financial control.

Using photogrammetry from installed (fixed) cameras, hand-held devices (originally the iPhone 4S) or drones, Stockpile Reports delivers dashboard reporting on material stored around a building site, indoors or at remote locations.

The software automates real-time volumetric analysis of materials and provides tracking and verification. Customers don’t get a 3D model, just the quantity of material present and a contour map. All the inventory can be linked to a company’s ERP system, completing the material loop.
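Stockpile Reports does not describe its volumetric method, so what follows is only a generic sketch of how a volume can be estimated once photogrammetry has produced a surface: resample the pile onto a regular height grid and sum the prisms standing above an assumed flat ground level. The function name, grid, `cell_size` and `base_level` are hypothetical; production tools typically model the ground surface under the pile rather than assuming it is flat.

```python
from typing import List


def stockpile_volume(heights: List[List[float]], cell_size: float,
                     base_level: float) -> float:
    """Approximate volume (m^3) of material above a flat base plane.

    heights:    regular grid of surface elevations in metres, e.g. resampled from a
                photogrammetric point cloud (hypothetical input format)
    cell_size:  grid spacing in metres
    base_level: assumed elevation of the flat ground under the pile, in metres
    Each cell is treated as a vertical prism; cells at or below the base add nothing.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    cell_area = cell_size * cell_size
    return sum(max(h - base_level, 0.0) * cell_area for row in heights for h in row)


# A small pile sampled on a 0.5 m grid, sitting on ground at 100.0 m elevation.
grid = [
    [100.0, 100.5, 100.0],
    [100.5, 102.0, 100.5],
    [100.0, 100.5, 100.0],
]
print(stockpile_volume(grid, cell_size=0.5, base_level=100.0))  # 1.0 (cubic metres)
```

Multiplying the result by the material’s bulk density would convert the volume into a tonnage.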

CEO David Boardman admitted that the company was a little slow to incorporate drones into its solution, so it vowed to evaluate any new technology that might disrupt its core market much earlier. The Apple iPhone, with its low-cost scanner and cameras, was an obvious target platform.

The US firm’s early experiments showed that the built-in LiDAR scanner wasn’t industrial strength, but that a number of on-board systems, when combined, could deliver interesting results.

“Apple gives us all kinds of delicious data that helps our pipeline. Even using [Apple’s] ARKit without the [LiDAR] sensor, on an iPhone 12, I still get estimated camera poses from ARKit.

“[Apple’s] ARKit provides visual odometry, accelerometer data, gravity sensor data – there’s about six different sensors that go into estimating camera pose. We can compare our in-house camera track with Apple’s and make it even more accurate and robust. It’s just incredible sensor fusion.”
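Boardman doesn’t say how the two camera tracks are combined, but a textbook way to merge two independent estimates of the same quantity is inverse-variance weighting: the more certain estimate gets more weight, and the fused result is more certain than either input. The sketch below applies it to camera position only; `fuse_estimates` is a hypothetical helper, the variances are assumed inputs, and a full pose fusion (for example a Kalman filter that also handles orientation) is beyond this illustration.

```python
from typing import Sequence, Tuple


def fuse_estimates(x_a: Sequence[float], var_a: float,
                   x_b: Sequence[float], var_b: float) -> Tuple[Tuple[float, ...], float]:
    """Inverse-variance weighted fusion of two independent estimates of the same vector.

    var_a and var_b are scalar variances (assumed equal on every axis). Returns the
    fused estimate and its variance, which is smaller than either input variance.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    if len(x_a) != len(x_b):
        raise ValueError("estimates must have the same dimension")
    w_a, w_b = 1.0 / var_a, 1.0 / var_b  # more certain estimate -> larger weight
    fused = tuple((w_a * a + w_b * b) / (w_a + w_b) for a, b in zip(x_a, x_b))
    return fused, 1.0 / (w_a + w_b)


# Hypothetical camera positions (metres) for the same frame from two trackers.
in_house = (1.00, 2.00, 0.50)  # e.g. a photogrammetric camera track, variance 0.04 m^2
arkit = (1.10, 2.00, 0.40)     # e.g. an ARKit pose estimate, variance 0.01 m^2
position, variance = fuse_estimates(in_house, 0.04, arkit, 0.01)
print(position, variance)
```

Because the fused variance is always below both inputs, adding a second independent tracker can only tighten the estimate, which is the intuition behind “sensor fusion”.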

As yet, the EveryPoint toolkit does not support Android devices – and there are very good reasons for this from a developer’s point of view. With Android, there are many manufacturers making many devices with many different specifications, and it’s almost impossible for developers to cater for every camera and physical variation.

Because Apple makes only a handful of LiDAR-capable devices, EveryPoint can work on a stable platform, where the core physical information required for photogrammetry calculations is constant from device to device. As Boardman explains, “An iPhone is an iPhone is an iPhone. Even the same phone generation can have different series of glass in the manufacturing process. If you’re trying to optimise and calibrate for the lenses and all that stuff, it’s just a nightmare to try to do something really accurate and precise in the Android world.”

Real world applications

Applications based on EveryPoint are now starting to appear, the first being Recon-3D for crime scene and forensic work. When a first responder arrives at a crime scene, for example, the application could be used to capture the scene quickly in 3D before anything is touched.

EveryPoint is already being used by some very large AEC developers to build applications, so fingers crossed we’ll see some of those soon.

Many architects we talk to share frustrations at getting quick surveys done for big refurbishment projects like airports or hospitals. So, could EveryPoint technology offer a new, near-instant approach?

Boardman certainly thinks so. “It’s absolutely possible,” he says. “It’s not there yet; someone will have to put some muscle [in] to pull the right parts together. But again, using EveryPoint, you could send fifty people running through the airport with iPhones to get all that data into EveryPoint and then kick off the process to turn [it] all into one unified model of the airport.”

Boardman told us that one firm beta-testing the software had already swapped out its BLK360s for a solution using iPads. Leica quotes the BLK360 as accurate to 6mm at 10m and 8mm at 20m, so, if iPads are good enough to replace it, this could be the ballpark accuracy to expect.

Conclusion

Apple started putting LiDAR sensors in the iPhone with the iPhone 12 Pro in 2020, and this year it will release the iPhone 14. In the two years since, there has been relatively little use of the LiDAR sensor, and Apple’s Augmented Reality glasses are still some way off. By combining photogrammetry with point clouds and ARKit, EveryPoint seems to have created a technology layer that could revolutionise the way data is captured on site, reducing the need for specialist, expensive equipment by using a device that almost everyone has.

It won’t be too long before snag lists come with 3D models, or verification issues on site can be captured and sent to the design teams for comparison against the original model.

EveryPoint has yet to publish its pricing model, but its cost would be included in whatever service charge a developer sets for its application. Boardman explained that the cloud portion will be configurable for Google, Amazon or Apple, and can even be integrated with Nvidia.

Boardman thinks that in future, everything we’re looking at will be digitised every second of every day by lots of different sensors. With all these sensors capturing the world 24/7, we asked him whether the ultimate goal could be an automatically built, live Digital Twin that changes as the world does.

“If I were Facebook, or a satellite company, I’d be starting with the planet first,” he says. “We are going from the bottom into spaces people care about – ready mix plants, quarries, railyards. We’ll start getting more and more and more and maybe someday we will be blessed to cover the entire planet. But in the meantime, we might just cover stops in the supply chain for a bulk materials company and we have digitised 1,000 of their locations and across their customer sites to the rail yards to their shipyards.”

Nvidia’s ‘Instant NeRF’

Jonathan Stephens, chief evangelist and marketing director at EveryPoint, has been experimenting with Nvidia’s ‘Instant NeRF’ (neural radiance fields) developer tools. This is code that Nvidia has put on GitHub to allow Windows users to run neural network code on the CUDA cores of Nvidia GPUs. The network learns a radiance field of the scene from the captured photos and can then render views of it very quickly, cutting the time from capture to a high-quality rendered model down to minutes. It’s worth connecting with Jonathan on LinkedIn and Twitter (@EveryPointIO) to see his posts on the results of his experimental work.
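For readers curious about what a radiance field renderer actually computes, here is a minimal sketch of the volume rendering step from the original NeRF formulation: samples along a camera ray each have a density and a colour, and they are composited front to back, weighted by how much light survives to reach them. In a real system the densities and colours come from the trained network; here they are plain inputs, and `render_ray` is a hypothetical helper. Nvidia’s Instant NeRF speeds up the network side (with a multiresolution hash encoding and CUDA kernels), which this sketch does not attempt to reproduce.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def render_ray(sigmas: Sequence[float], colours: Sequence[RGB],
               deltas: Sequence[float]) -> Tuple[RGB, float]:
    """Composite samples along one camera ray, NeRF-style (front to back).

    sigmas:  volume density at each sample (would come from the trained network)
    colours: RGB colour at each sample, components in [0, 1]
    deltas:  distance from each sample to the next along the ray
    Implements C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i, where
    T_i = exp(-sum_{j<i} sigma_j * delta_j). Returns (pixel colour, opacity).
    """
    if not len(sigmas) == len(colours) == len(deltas):
        raise ValueError("sigmas, colours and deltas must have the same length")
    transmittance = 1.0  # T_i: fraction of light reaching sample i unblocked
    rgb = [0.0, 0.0, 0.0]
    for sigma, colour, delta in zip(sigmas, colours, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * colour[k]
        transmittance *= 1.0 - alpha  # same as multiplying by exp(-sigma * delta)
    return (rgb[0], rgb[1], rgb[2]), 1.0 - transmittance


# A dense red surface in front of a green one: the green sample is hidden.
print(render_ray([1000.0, 5.0], [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [1.0, 1.0]))
# ((1.0, 0.0, 0.0), 1.0)

# Thin haze: both samples contribute and some light passes straight through.
print(render_ray([0.2, 0.2], [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [1.0, 1.0]))
```

Repeating this for every pixel’s ray produces an image; because the whole calculation is differentiable, the network can be trained by comparing such renders against the captured photos.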

The post EveryPoint – reality capture with the iPhone Pro appeared first on AEC Magazine.
